The Marketing Mix Model is a popular business tool designed to quantify marketing Return on Investment (ROI), guide the optimal allocation of marketing resources and inform sales forecasting. The foundations of modern Marketing Mix Modeling (MMM) comprise four main pillars.

1. Microeconomic consumer theory

Successful MMM requires a solid understanding of the product demand curve. At its most basic, this is defined as the relationship between quantity demanded and price. In perfectly competitive markets, price conveys all the information consumers need. In imperfectly competitive markets, however, product differentiation takes on a key role, driven by a range of non-price related marketing strategies. In this sense, marketing mix models are effectively price demand curves, augmented with media and economic variables that act to shift the demand curve in price-quantity space.

Single equation forms

The standard mix model takes the following ‘single-equation’ form:

$Sales_{it} = \alpha_i +T_i +\theta_{it} + \gamma_{ij}P_{ijt} +\sum_{k=1}^K\beta_{ijk}M_{kijt} +\sum_{k=1}^K\delta_kD_{kt} + \varepsilon_{it}$ (1)

Sales of product i at time t are a function of a base level αi, a trend or drift term (Ti), a seasonal index (θit), own and competitor prices Pijt, own and competitor marketing variables Mkijt (lagged or Adstocked as required) and economic driver variables Dkt. For the purposes of budget allocation, concave response functions (diminishing returns) are typically incorporated. The generalised additive model is widely used, with non-linear transforms applied directly to the marketing variables. Alternatively, the multiplicative form is a popular choice, incorporating both non-linear relationships and synergies between the variables.
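As a rough illustration of the Adstock and diminishing-returns transforms mentioned above, the following sketch applies a geometric carry-over followed by a simple concave response curve. The function names, the `decay` and `half_saturation` parameters, the specific functional forms and the spend figures are all illustrative assumptions; the text does not prescribe a particular transform.

```python
import numpy as np


def geometric_adstock(spend, decay):
    """Carry a fraction `decay` of last period's adstock into this period."""
    spend = np.asarray(spend, dtype=float)
    adstock = np.zeros_like(spend)
    carry = 0.0
    for t, x in enumerate(spend):
        carry = x + decay * carry
        adstock[t] = carry
    return adstock


def saturate(x, half_saturation):
    """Concave response x / (x + half_saturation), bounded between 0 and 1."""
    x = np.asarray(x, dtype=float)
    return x / (x + half_saturation)


# Hypothetical weekly spend: one burst, two dark weeks, then a smaller burst.
spend = np.array([100.0, 0.0, 0.0, 50.0])
adstocked = geometric_adstock(spend, decay=0.5)
response = saturate(adstocked, half_saturation=100.0)  # would enter (1) as M
print(adstocked)
print(response)
```

The transformed series would then replace the raw marketing variable in equation (1). In practice the decay and saturation parameters are usually estimated or searched over rather than fixed in advance.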

Demand system forms

If the focus is on one product at a time, equation (1) is a suitable approximation. However, it is often applied to groups of competing products and sold as ‘category’ modelling. This is invalid. For example, it is perfectly possible that total volume gains across the group are either greater than or less than total volume losses. Whereas this is typically interpreted as category growth or shrinkage respectively, it is mainly a consequence of the fact that sets of single equations are unrelated and do not ‘add up’ (Deaton and Muellbauer, 1980).

To overcome this problem, a more accurate representation of underlying microeconomic utility theory is required, where consumers choose between competing products based on price, marketing and an income (budget) constraint. This leads to simultaneous-equation demand-system approaches that treat the defined category as a single unit, delivering consistent estimates of gains and losses together with meaningful category expansion effects of brand-specific marketing. This distinction is important if, for example, manufacturers are seeking empirical evidence to justify increased in-store shelf space, thereby facilitating the manufacturer-retailer relationship. The result is more accurate price elasticities, marketing ROI and budget allocation.
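The 'adding up' property can be illustrated with a toy share model. The sketch below uses a multinomial-logit style attraction model, swapped in purely for illustration: it is not the demand system of Deaton and Muellbauer, and the utilities, the fixed category volume and the function name are hypothetical. It shows only that when brands are modelled jointly as shares of one category, one brand's gain is exactly offset by the others' losses.

```python
import numpy as np


def attraction_shares(utilities):
    """Logit shares exp(u_i) / sum_j exp(u_j); they sum to one by construction."""
    u = np.asarray(utilities, dtype=float)
    expu = np.exp(u - u.max())  # subtract the max for numerical stability
    return expu / expu.sum()


category_volume = 1000.0                   # hypothetical fixed category size
base_utility = np.array([1.0, 0.5, 0.0])   # three competing brands
boosted_utility = base_utility + np.array([0.3, 0.0, 0.0])  # brand 0 advertises

base_sales = category_volume * attraction_shares(base_utility)
boosted_sales = category_volume * attraction_shares(boosted_utility)
change = boosted_sales - base_sales
print(change, change.sum())  # brand 0 gains, rivals lose, net change is zero
```

A set of unrelated single equations carries no such constraint, which is why its gains and losses need not balance. Genuine category expansion would be modelled separately, through the category volume itself.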

2. Purchase journeys and causality

With the advent of multi-channel marketing, the foundations of modern mix models need to reflect the full offline and online consumer path to purchase. The typical focus is on the inter-related role of paid, owned and earned media, where traditional marketing investments stimulate a cycle that starts with natural online search, continuing through to website research and finally onto online and offline sales. This expands equation (1) into a network model of the offline and online consumer journey.

Consumer journey networks represent simultaneous equation systems, where current-period dependent (endogenous) variables also appear as independent variables. Endogenous independent regressors are correlated with the model error term and violate the assumptions of the marketing mix model. This leads to endogeneity bias, where model parameters predominantly reflect simple correlations rather than meaningful causal relationships. Under these circumstances, more advanced econometric techniques are required, where the causal structure is modelled subject to chosen identification schemes.
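Endogeneity bias can be seen in a small simulation. The sketch below assumes a deliberately simplified journey in which TV drives search and search drives sales, with an unobserved demand shock affecting both search and sales. It then compares OLS with an instrumental-variable estimate that uses TV as the instrument. Instrumental variables is only one possible identification scheme and is chosen here for brevity. All variable names, coefficients and the assumption that TV affects sales only through search are hypothetical.

```python
import numpy as np


def ols_slope(x, y):
    """Slope from a simple regression of y on x (with intercept)."""
    xc, yc = x - x.mean(), y - y.mean()
    return (xc @ yc) / (xc @ xc)


def iv_slope(z, x, y):
    """Instrumental-variable slope: cov(z, y) / cov(z, x)."""
    zc = z - z.mean()
    return (zc @ (y - y.mean())) / (zc @ (x - x.mean()))


rng = np.random.default_rng(0)
n = 20_000
tv = rng.normal(size=n)       # exogenous driver, used as the instrument
shock = rng.normal(size=n)    # unobserved demand shock
search = tv + shock + rng.normal(size=n)  # endogenous: depends on the shock
sales = 2.0 * search + shock              # true search effect is 2.0

beta_ols = ols_slope(search, sales)
beta_iv = iv_slope(tv, search, sales)
print(beta_ols, beta_iv)
```

Under these assumptions the OLS slope is pulled above the true value because search is correlated with the error term, while the instrumented estimate should sit close to 2.0. With real data the validity of any instrument has to be argued case by case.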

3. Brand building

In order to successfully explain both call-to-action and brand-building aspects of marketing strategy, it is critical that models are set up to differentiate between short and long-term demand. This is ignored in standard mix modelling, which is essentially short-term focused. To address this, flexible time series forms of equation (1) are required such as the Unobserved Component Model (UCM).

$Sales_{it} = \mu_{it} +\theta_{it} + \gamma_{ij}P_{ijt} +\sum_{k=1}^K\beta_{ijk}M_{kijt} +\sum_{k=1}^K\delta_kD_{kt} + \varepsilon_{it}$ (1a)

$\mu_{it} = \mu_{it-1} + \lambda_{it-1} +\eta_{it}$ (1b)

$\lambda_{it} = \lambda_{it-1}+\zeta_{it}$ (1c)

$\theta_{it} = -\sum_{j=1}^{p-1}\theta_{it-j}+\kappa_{it}$ (1d)

The intercept αi in equation (1) is replaced with a time-varying (stochastic) trend μit comprising two components described by equations 1(b) and 1(c). Equation 1(b) allows the underlying sales level to follow a random walk with a growth factor λit, analogous to the conventional trend term Ti. Equation 1(c) allows λit itself to follow a random walk. Depending on the estimated variances of the disturbances $\eta_{it}$ and $\zeta_{it}$, the system can accommodate both stationary and non-stationary product demand, allowing the data to decide between them. Equation 1(d) specifies seasonal effects over p seasons, which are constrained to sum to zero over any one year (in expectation when $\kappa_{it}$ is stochastic). If the variance of $\kappa_{it}$ is zero, then seasonality is deterministic.
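To make the recursions concrete, the following sketch simulates the level, slope and seasonal components of equations 1(b) to 1(d) for a single product. It is a simulation only, not an estimation routine (in practice the components are typically extracted with the Kalman filter). The function name, starting values and standard deviations are illustrative assumptions.

```python
import numpy as np


def simulate_components(n, p, sd_eta, sd_zeta, sd_kappa, seed=0):
    """Simulate level mu, slope lam and seasonal theta for n periods, p seasons."""
    rng = np.random.default_rng(seed)
    mu = np.empty(n)
    lam = np.empty(n)
    theta = np.empty(n)
    mu[0], lam[0] = 100.0, 0.5  # hypothetical starting level and growth
    theta[:p - 1] = rng.normal(0.0, 5.0, size=p - 1)  # initial seasonal pattern
    for t in range(1, n):
        mu[t] = mu[t - 1] + lam[t - 1] + rng.normal(0.0, sd_eta)  # equation 1(b)
        lam[t] = lam[t - 1] + rng.normal(0.0, sd_zeta)            # equation 1(c)
    for t in range(p - 1, n):
        # equation 1(d): minus the sum of the previous p-1 seasonal effects
        theta[t] = -theta[t - p + 1:t].sum() + rng.normal(0.0, sd_kappa)
    return mu, lam, theta


# With all disturbance variances set to zero the model collapses to a
# deterministic linear trend plus a fixed seasonal pattern.
mu, lam, theta = simulate_components(n=48, p=12, sd_eta=0.0, sd_zeta=0.0, sd_kappa=0.0)
yearly_sums = np.convolve(theta, np.ones(12), mode="valid")
print(mu[:3], np.abs(yearly_sums).max())
```

Setting `sd_eta` or `sd_zeta` above zero lets the level or growth rate drift over time, which is the non-stationary case described above.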

Model 1(a)-(d) allows a direct separation of demand behaviour into short and long-run features. The long-term component can then be analysed with dynamic long-term network systems such as the Vector Error Correction Model (VECM) to quantify long-term brand-building behaviour.

4. Heterogeneity

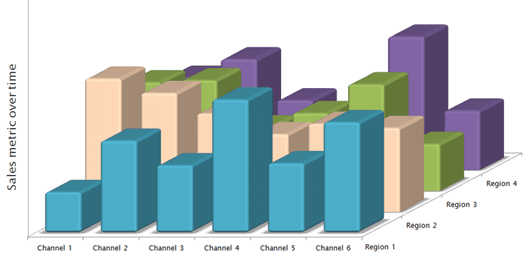

A central feature of demand behaviour is the high degree of heterogeneity that exists across individual consumers. A key foundation of modern MMM analysis is the ability to incorporate such differences into the modelling process. This leads to disaggregated ‘longitudinal’ data sets such as that illustrated in Figure 1, where all dimensions or ‘cross-sections’ are modelled simultaneously. Typically, we are seeking to quantify cross-sectional deviations from the average response, helping to improve targeting of marketing investments.

Figure 1: Longitudinal data structure

In standard disaggregated modelling, equation (1) is essentially ‘stacked’ across all cross-sectional units and written in compact form as follows:

$y_{it} = x_{it}\beta_i + \varepsilon_{it}$ (2)

Where i = 1, …, N denotes the defined cross-sectional unit such as product, store, chain or region, yit denotes a vector of dependent variables for product or brand sales, xit denotes a row vector of K explanatory variables for cross-section i, βi is a K-vector of response coefficients and εit represents a vector of error terms.

Equation (2) is the panel data analogue of equation (1), where the intercept in each cross-section represents a ‘fixed’ effect to account for unobserved (time-invariant) cross-sectional differences. Allowing these terms to evolve as per equations 1(b) and 1(c) gives a dynamic unobserved component panel or Seemingly Unrelated Time Series Equations (SUTSE) model.

Correct estimation of the marketing response parameters βi in (2) depends on the properties of the error structure, both within and across cross-sections. Classical Ordinary Least Squares (OLS) requires that the error covariance matrix of the ith cross section satisfies the standard assumptions of constant variance and zero serial correlation:

$E(\varepsilon_{it}\varepsilon_{it}^{'}) = \sigma_i^2I_T = Π_i$ (3)

With constant variance and zero contemporaneous error correlation across cross-sections:

$\begin{pmatrix} Π_1 & 0 & \cdots & 0\\ 0 & Π_2 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \cdots & Π_N \end{pmatrix}$ (4)

Ordinary Least Squares (OLS) estimation of (2) provides the coefficient vector βi, with marketing response estimates specific to each cross-section i. In circumstances where (3) and (4) do not hold, such as in the presence of heteroscedastic and/or autocorrelated errors, Feasible Generalised Least Squares (FGLS) approaches are typically applied. This essentially uses OLS to estimate the relevant error structures and transform the data such that (3) and (4) are then applicable.
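As one concrete instance of the FGLS idea, the sketch below handles first-order autocorrelated errors in a single cross-section: OLS residuals are used to estimate the autocorrelation, the data are quasi-differenced, and OLS is re-run on the transformed data. This Cochrane-Orcutt style procedure is only one of several FGLS variants. The simulated data, the true coefficients and the AR(1) error structure are assumptions made for illustration.

```python
import numpy as np


def ols(X, y):
    """Least-squares coefficients for y = X b + e."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def fgls_ar1(X, y):
    """Two-step FGLS for AR(1) errors (first observation is dropped)."""
    resid = y - X @ ols(X, y)                                   # 1. OLS residuals
    rho = (resid[1:] @ resid[:-1]) / (resid[:-1] @ resid[:-1])  # 2. estimate rho
    X_star = X[1:] - rho * X[:-1]                               # 3. quasi-difference
    y_star = y[1:] - rho * y[:-1]
    return ols(X_star, y_star), rho                             # 4. OLS on transformed data


# Simulated single cross-section: sales = 10 + 3 * media + AR(1) error.
rng = np.random.default_rng(1)
T = 2_000
media = rng.normal(size=T)
shocks = rng.normal(size=T)
u = np.zeros(T)
for t in range(1, T):
    u[t] = 0.7 * u[t - 1] + shocks[t]
X = np.column_stack([np.ones(T), media])
y = X @ np.array([10.0, 3.0]) + u

beta_fgls, rho_hat = fgls_ar1(X, y)
print(beta_fgls, rho_hat)
```

Because the intercept column is transformed along with the other regressors, the coefficients remain on the original scale. Heteroscedasticity across cross-sections would be handled analogously, by weighting each cross-section with its estimated error variance.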

Big Data methods

As modern data sets burgeon in size, obtaining reliable detailed marketing response information can be challenging. Full interaction time series-cross sectional approaches such as model (2) are often unstable as the number of dimensions and parameters increases, delivering many zero and/or incorrectly signed effects. Consequently, alternative methods are required to handle increasing data size and granularity that obviate the need for aggregation over product and consumer dimensions.

Classical pooling

The most basic approach is to pool the data. This provides a single average response coefficient β for each relevant explanatory variable in model (2). The downside is that this ignores response heterogeneity over cross-sections and is of little use to media planners and budget holders seeking guidance on media targeting at regional level for example. This can be remedied by regional pooling, but at the cost of increasing the number of parameters whilst still imposing homogeneity across products and consumers.

Hierarchical Bayes

A more flexible technique is to introduce random coefficients in the form of a Hierarchical Bayesian structure. Equation (2) is the typical structure of the classical or frequentist approach to statistics, where the model parameters are regarded as unknown fixed quantities to be estimated from the data. In the Bayesian approach, parameters are viewed as unknown outcomes of a random process determined by another higher level joint distribution. In the context of Figure 1, this assumes that each cross-sectional coefficient is drawn from a population distribution shared by all the cross-sections (exchangeability). This is a strong assumption, but leads to a dramatic reduction in the number of estimated parameters. For example, consider model (2) re-written as follows:

$y_{it} = x_{it}\beta_i + \varepsilon_{it}$ (2a)

$\beta_i=\beta + v_i$ (2b)

$y_{it} = x_{it}\beta + u_{it}$ (2c)

$u_{it} = x_{it}v_i +\varepsilon_{it}$ (2d)

Where equation 2(a) represents each cross-sectional (micro) model and equation 2(b) represents a higher level (macro) distribution for coefficients βi with mean β and error $v_i$, which is normally distributed with mean zero and variance $Γ$. It is this micro and macro view that gives the model its hierarchical structure. Combining 2(a) and 2(b) leads to model 2(c), with composite error term 2(d). Covariance matrix (3) then becomes:

$E(u_{it}u_{it}^{'}) = E[(x_{it}v_i +\varepsilon_{it}) (x_{it}v_i +\varepsilon_{it})^{'}] = \sigma_i^2I_T +x_{it}Γx_{it}^{'} =Π_i$ (5)

The error covariance matrix of each cross-section is now a function of both the error variance ($\sigma_i^2$) and the parameter spread over cross-sections ($Γ$). The balance between the two determines the predicted βi coefficients and reveals the distinctly Bayesian nature of the approach. A high value of $\sigma_i^2$ relative to $Γ$ implies relatively imprecise cross-sectional estimates. Consequently, the cross-sectional data have little to offer and the individual βi values are shrunk towards the pooled (prior) mean value. Conversely, where $\sigma_i^2$ is low relative to $Γ$, the sample data are more informative and the cross-section-specific estimates dominate with minimal shrinkage. In this way, the Hierarchical Bayesian coefficients are essentially weighted averages of the pooled and cross-sectional estimates.
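The weighted-average interpretation can be sketched for the simplest case of a single coefficient per cross-section, treating the prior mean and variance as known. Here `sampling_var` stands for the sampling variance of each cross-sectional estimate, which rises with $\sigma_i^2$, and `prior_var` plays the role of $Γ$. The function name and all numbers are hypothetical.

```python
import numpy as np


def shrink(beta_hat, sampling_var, prior_mean, prior_var):
    """Precision-weighted average of each unit's estimate and the pooled mean."""
    weight = prior_var / (prior_var + sampling_var)  # weight on the unit's own data
    return weight * beta_hat + (1.0 - weight) * prior_mean, weight


# Two hypothetical regions: the first is estimated precisely, the second is noisy.
beta_hat = np.array([0.2, 1.0])
sampling_var = np.array([0.01, 1.0])
shrunk, weight = shrink(beta_hat, sampling_var, prior_mean=0.5, prior_var=0.09)
print(weight)  # high weight for region 1, low weight for region 2
print(shrunk)  # region 1 barely moves, region 2 is pulled towards 0.5
```

The precisely estimated region keeps most of its own estimate, while the noisy region is pulled strongly towards the pooled mean, which is the behaviour described above.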

The random coefficient model is often called Empirical Bayes, since the prior mean and variance of the parameter distribution are derived as fixed point estimates from the data itself. This neglects a source of uncertainty. More standard Bayesian approaches incorporate this uncertainty through priors set independently of the data and represent an increasingly popular method of introducing user-control into marketing mix modelling. A prior distribution for β allows us to set the mean value of the macro distribution to externally given values. This is particularly useful if we wish to constrain parameters to be positive or negative and/or set values consistent with previous studies. A prior distribution for the coefficient covariance matrix then allows control over the degree of shrinkage around the given mean.

Attribute based models

An alternative approach to dimension reduction is based on the economics of how consumers shop for products. Marketing mix models are based on conventional microeconomic demand theory, where consumer preferences are defined over the individual products themselves. However, an alternative approach defines preferences across higher level product attributes and characteristics (Lancaster, 1966). For example, the television category can be divided into brand, screen size and technology and further divided into brand name, dimensions and LCD/LED/Plasma/3D. Provided that there is a sufficient level of commonality in attributes and characteristics across the category, a complete product (SKU) level data set can be fully described over a significantly reduced number of dimensions. A good example of this approach can be found in Fader and Hardie (1996).
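The dimension reduction can be illustrated by counting parameters. The sketch below builds a hypothetical television category in which every SKU is a combination of brand, screen size and technology, then encodes each SKU by its attribute levels rather than by its own identifier. The brand names, sizes and technologies are invented for illustration, and this is not the specification used by Fader and Hardie.

```python
from itertools import product

import numpy as np

# Hypothetical attribute levels for a television category.
brands = ["BrandA", "BrandB", "BrandC"]
sizes = ["32in", "43in", "55in"]
technologies = ["LCD", "LED"]

# Every SKU is one combination of attribute levels: 3 * 3 * 2 = 18 SKUs.
skus = list(product(brands, sizes, technologies))

# One indicator column per attribute level: 3 + 3 + 2 = 8 columns.
levels = brands + sizes + technologies
column = {level: j for j, level in enumerate(levels)}

design = np.zeros((len(skus), len(levels)), dtype=int)
for row, sku in enumerate(skus):
    for level in sku:
        design[row, column[level]] = 1

print(len(skus), design.shape)  # 18 SKUs described by 8 attribute indicators
```

Each SKU still has a unique row, so nothing is lost in describing the products, yet SKU-specific effects are replaced by a much smaller set of attribute effects. In an actual regression one level per attribute would be dropped as a baseline to avoid collinearity.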
